Understanding Autonomous Vehicle Tech Stacks - Carla - v0.0.2

Since my last post, a lot has happened in my tech world. I purchased a new desktop with a powerful GPU, 64 GB of RAM, and a 1 TB hard drive. I installed Ubuntu, set up my development environment, and programmatically ran a simple car simulation in Carla.

CARLA is a free, open-source simulator built on Unreal Engine that makes it easier for people to work on autonomous driving tech. It was started by Germán Ros and Professor Antonio M. López at the Computer Vision Center in Barcelona, with the goal of making self-driving research more accessible.

Intel helped fund the project early on, and Unreal Engine was a perfect fit because it's free and gives full access to the source code, so researchers and developers can tweak it however they need. Since its launch at CoRL in 2017, CARLA has grown a lot, with over 1,600 active users including universities and R&D teams from various companies.

What’s cool is that CARLA really levels the playing field. Whether you're a big company or just a small team, you can jump in, contribute, and push autonomous vehicle development forward.

Germán Ros now works at Nvidia, creating new products for autonomous driving simulation, with a focus on sensor simulation and tooling for autonomous systems validation. Links: Omniverse, his website.

Very inspiring!

Local Setup

I was able to get Carla version 0.9.15 installed by extracting the compressed tar archive and running the simulator launch script.

- https://github.com/carla-simulator/carla

- https://carla.readthedocs.io/en/latest/

- https://www.unrealengine.com/pt-BR/spotlights/carla-democratizes-autonomous-vehicle-r-d-with-free-open-source-simulator

A small screen appears and I can see the city loaded.

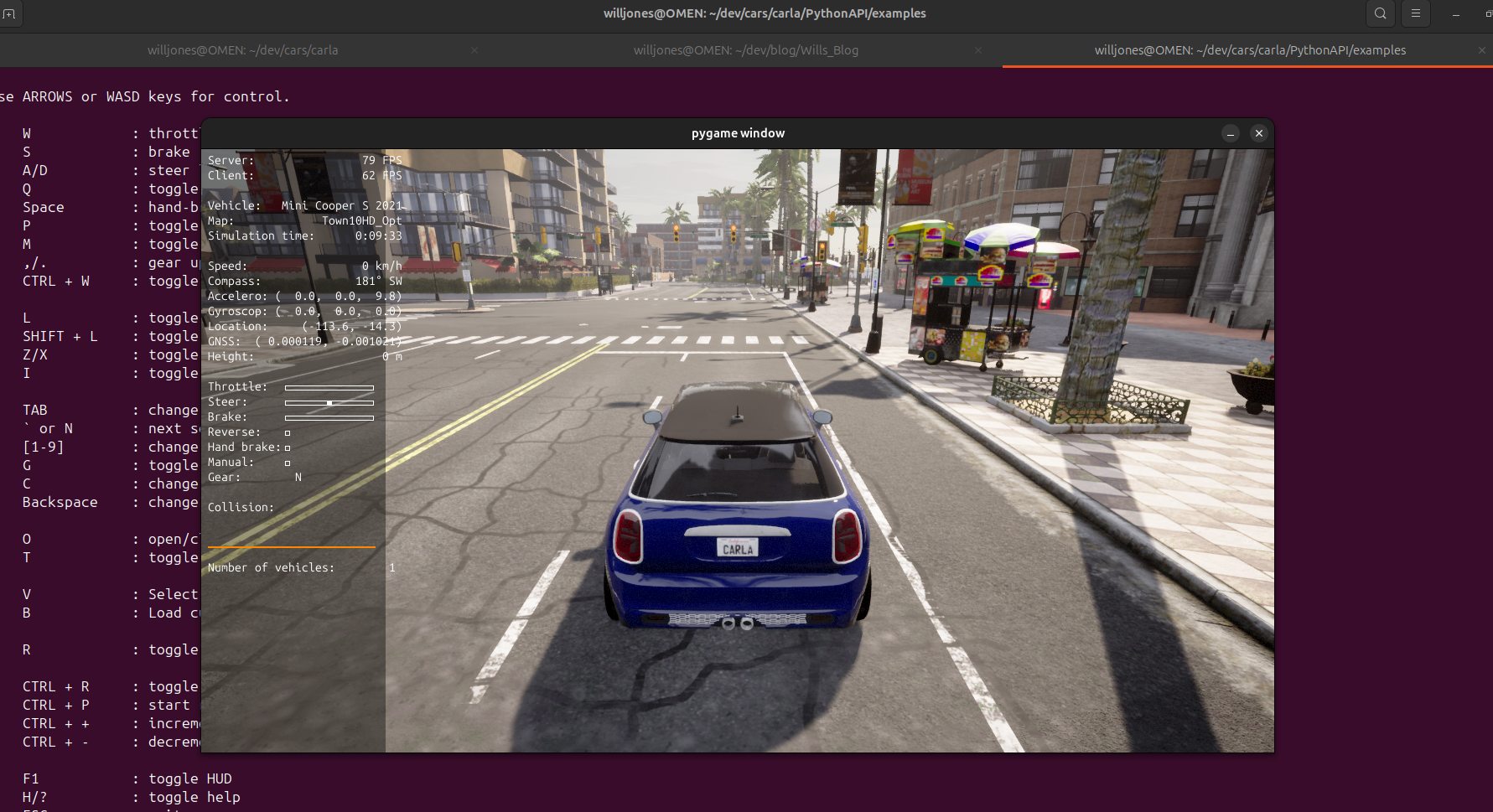

The installation comes with a set of examples using Carla's Python API. For this blog I ran manual_control.py, which lets me drive a car like a video game with the WASD keys. All of the details about connecting to the Carla host server, spawning the vehicle, weather, location, cameras, sensors, and manual controls are configured in the code.
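To give a feel for what that configuration involves, here is a minimal sketch of the first few steps: connecting to the server, setting the weather, and spawning one vehicle. This is not the code from manual_control.py. The helper names (`connect`, `spawn_vehicle`, `main`) are made up for illustration, and it assumes the simulator is already running locally on the default port 2000.

```python
import random


def connect(host="localhost", port=2000, timeout_s=10.0):
    """Connect to a running CARLA server (assumed: local, default port)."""
    import carla  # imported lazily so spawn_vehicle can be used without CARLA

    client = carla.Client(host, port)
    client.set_timeout(timeout_s)  # seconds to wait on network calls
    return client


def spawn_vehicle(world, blueprint_filter="vehicle.*", rng=random):
    """Spawn one vehicle at a randomly chosen spawn point of the current map."""
    blueprints = list(world.get_blueprint_library().filter(blueprint_filter))
    spawn_points = list(world.get_map().get_spawn_points())
    if not blueprints or not spawn_points:
        raise RuntimeError("no matching blueprints or no spawn points")

    blueprint = rng.choice(blueprints)
    transform = rng.choice(spawn_points)

    # try_spawn_actor returns None instead of raising if the spot is blocked.
    vehicle = world.try_spawn_actor(blueprint, transform)
    if vehicle is None:
        raise RuntimeError("spawn point was blocked; try again")
    return vehicle


def main():
    """Hypothetical entry point; needs the simulator running."""
    import carla

    client = connect()
    world = client.get_world()
    world.set_weather(carla.WeatherParameters.ClearNoon)

    vehicle = spawn_vehicle(world)
    try:
        print("spawned", vehicle.type_id)
    finally:
        vehicle.destroy()  # leave the world clean for the next run
```

Calling `main()` while the simulator is open should make a car appear somewhere in the city and then remove it again. The real example script does far more (HUD, keyboard handling, several sensors), so treat this as the bare skeleton only.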

I run the script and a second screen appears with a car.

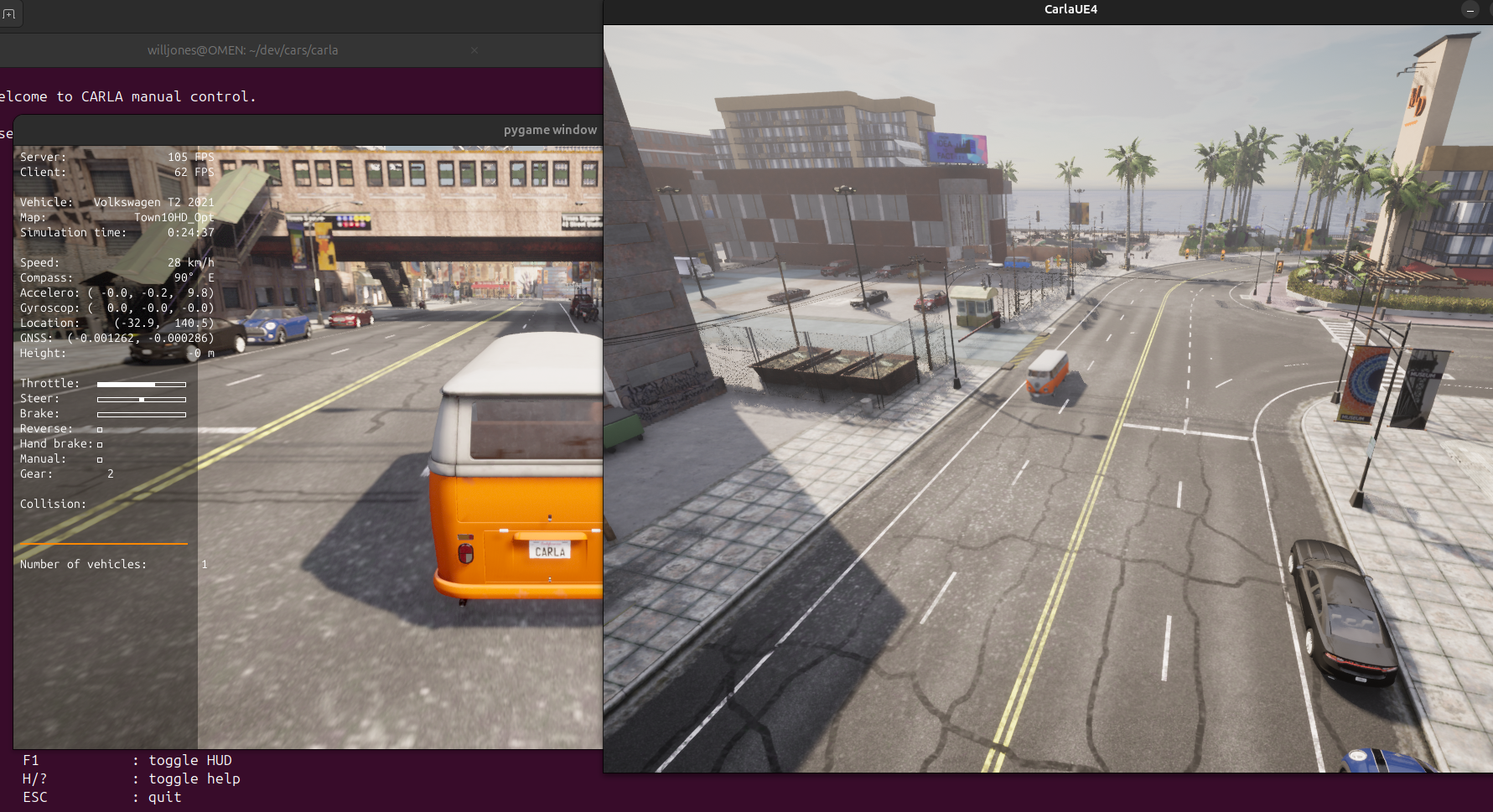

Reading up on the controls, I can turn on autopilot and watch the car begin to drive around. With the second screen connected to the host server, I can fly around in the initial screen and find the car navigating the city. A picture of how this could be used to spawn a city of cars all using autonomous driving algorithms begins to take shape.
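As a rough sketch of that "city of cars" idea, the function below spawns several vehicles and switches each one to autopilot. `spawn_autopilot_fleet` and `main` are hypothetical names of my own, and the sketch assumes a server on localhost:2000. As far as I understand it, `set_autopilot(True)` hands the vehicle over to CARLA's built-in Traffic Manager rather than to any driving algorithm of mine.

```python
import random


def spawn_autopilot_fleet(world, count, seed=None):
    """Spawn up to `count` vehicles and enable autopilot on each one."""
    rng = random.Random(seed)
    blueprints = list(world.get_blueprint_library().filter("vehicle.*"))
    spawn_points = list(world.get_map().get_spawn_points())
    if not blueprints:
        raise RuntimeError("no vehicle blueprints found")

    rng.shuffle(spawn_points)  # spread the cars around the map
    vehicles = []
    for transform in spawn_points[:count]:
        vehicle = world.try_spawn_actor(rng.choice(blueprints), transform)
        if vehicle is None:
            continue  # spot was occupied; skip it
        vehicle.set_autopilot(True)
        vehicles.append(vehicle)
    return vehicles


def main():
    """Hypothetical entry point; needs the simulator running."""
    import time

    import carla

    client = carla.Client("localhost", 2000)
    client.set_timeout(10.0)
    world = client.get_world()

    vehicles = spawn_autopilot_fleet(world, count=20)
    print("spawned", len(vehicles), "vehicles on autopilot")
    try:
        time.sleep(60)  # watch them drive around for a minute
    finally:
        for vehicle in vehicles:
            vehicle.destroy()
```

The function can return fewer vehicles than requested, because a map only has so many spawn points and some may be occupied.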

Now that I have the simulator running, I am thinking my next goal will be to use perception from the car to make real-time decisions. Carla allows me to attach sensors such as an RGB camera, a depth camera, LIDAR, and a semantic segmentation camera to a vehicle and transmit those signals to my program for controlling the car.
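Here is a small sketch of what attaching a sensor could look like, using the RGB camera as the example. The camera pushes every frame into a queue through `listen`, and my program pulls frames off that queue whenever it is ready. The helper names (`attach_rgb_camera`, `pixel_rgb`, `main`), the image size, and the mounting position are all my own assumptions. It also relies on CARLA camera images being 32-bit BGRA.

```python
import queue


def attach_rgb_camera(world, vehicle, mount_transform, width=800, height=600):
    """Attach an RGB camera to `vehicle`; return (camera, frame_queue)."""
    blueprint = world.get_blueprint_library().find("sensor.camera.rgb")
    blueprint.set_attribute("image_size_x", str(width))
    blueprint.set_attribute("image_size_y", str(height))

    # attach_to makes the transform relative to the vehicle, not the world.
    camera = world.spawn_actor(blueprint, mount_transform, attach_to=vehicle)

    frames = queue.Queue()
    camera.listen(frames.put)  # callback runs for every image the sensor sends
    return camera, frames


def pixel_rgb(raw_data, width, x, y):
    """Read one pixel from a flat BGRA buffer and return it as (r, g, b)."""
    offset = 4 * (y * width + x)  # 4 bytes per pixel, rows stored in order
    blue, green, red = raw_data[offset], raw_data[offset + 1], raw_data[offset + 2]
    return red, green, blue


def main():
    """Hypothetical entry point; needs the simulator running."""
    import carla

    client = carla.Client("localhost", 2000)
    client.set_timeout(10.0)
    world = client.get_world()

    vehicle_bp = list(world.get_blueprint_library().filter("vehicle.*"))[0]
    spawn_point = world.get_map().get_spawn_points()[0]
    vehicle = world.spawn_actor(vehicle_bp, spawn_point)

    # Assumed mounting point: slightly forward and above the car's origin.
    mount = carla.Transform(carla.Location(x=1.5, z=2.4))
    camera, frames = attach_rgb_camera(world, vehicle, mount)
    try:
        image = frames.get(timeout=5.0)  # wait for the first frame
        print("frame", image.frame, "top-left pixel",
              pixel_rgb(image.raw_data, image.width, 0, 0))
    finally:
        camera.stop()
        camera.destroy()
        vehicle.destroy()
```

I went with a queue so that the sensor callback stays tiny and all of the real work happens in my own loop. The other sensors should attach the same way with a different blueprint name, although their data formats differ.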

This is a large step that will consist of many small steps and could be as simple or as complicated as one could imagine. I'll need to define the scope as I think more about my goal.

I also want to learn more about the machine learning models that train on the data received from car sensors. The biggest one that comes to mind is object detection on video or images. First things first... I will learn how to transmit any kind of sensor data and interact with it through code.

I will check back in once I am writing my own Carla code.

Cheers!

Will